Francesco Innocenti presents best paper for ICML Workshop on Localized Learning (LLW)

Francesco Innocenti’s submission for the ICML 2023 Workshop on Localized Learning (LLW) won ‘best contributed paper’. He presented the paper ‘Understanding Predictive Coding as a Second-Order Trust-Region Method’, written with Ryan Singh and Christopher Buckley, at the workshop in Hawaii this week.

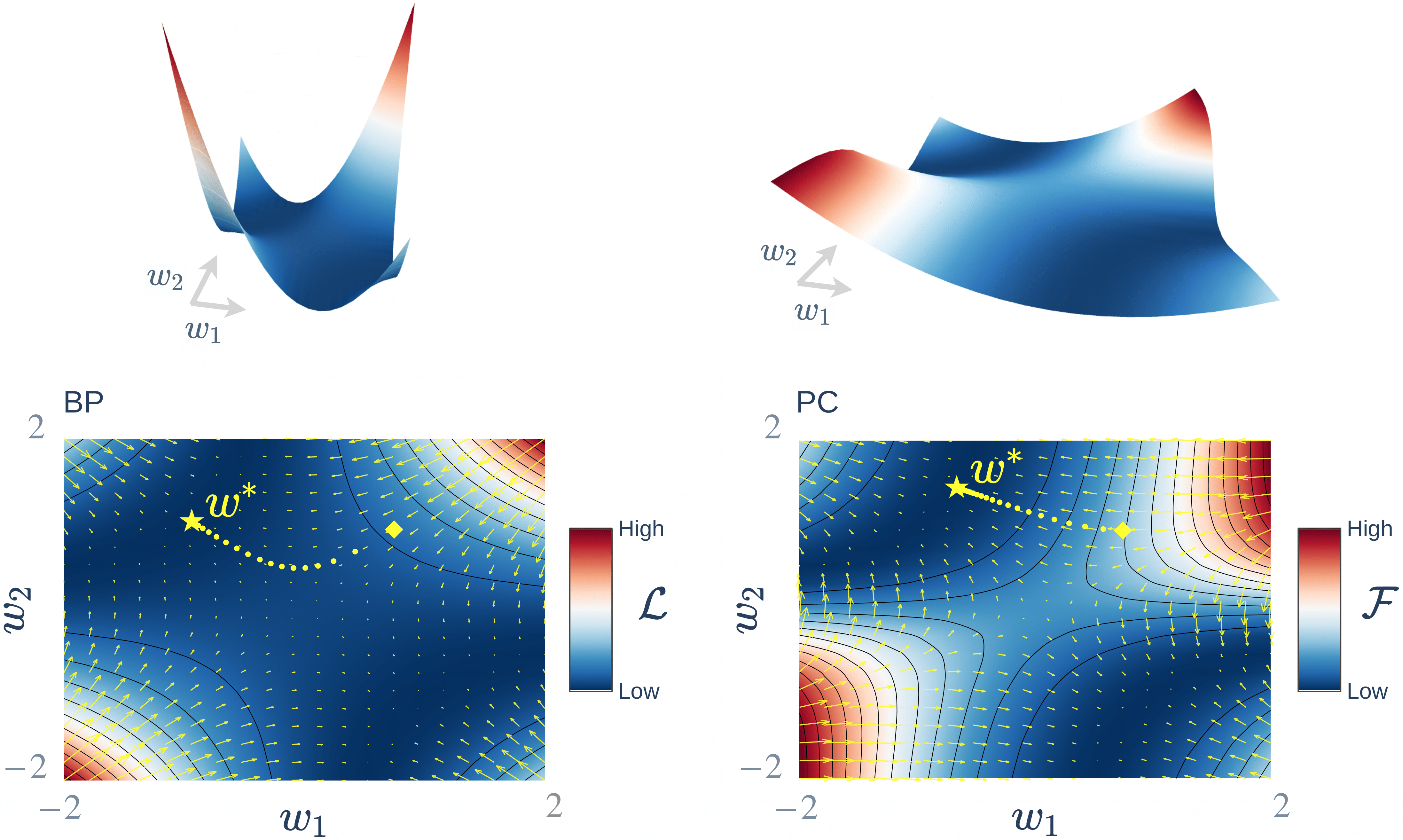

The paper examines predictive coding (PC), a brain-inspired algorithm that has recently been suggested to provide advantages over backpropagation (BP) in biologically relevant scenarios. While theoretical work has mainly focused on showing how PC can approximate BP in various limits, the putative benefits of “natural” PC are less understood. The paper develops a theory of PC as an adaptive trust-region (TR) algorithm that uses second-order information. It shows that the learning dynamics of PC can be interpreted as interpolating between BP’s loss gradient direction and a TR direction found by the PC inference dynamics.
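For readers unfamiliar with trust-region methods, the idea of interpolating between a gradient direction and a trust-region direction can be illustrated with a generic damped-Newton step. This is a minimal sketch of the textbook step d = -(H + λI)⁻¹g, not the paper's algorithm or its PC inference dynamics. The matrix `H`, the gradient `g`, the damping values and the helper names `damped_step` and `cosine` are all assumptions made up for illustration.

```python
import math

def damped_step(H, g, lam):
    """Solve (H + lam*I) d = -g for a 2x2 symmetric matrix H (hypothetical helper)."""
    a, b = H[0][0] + lam, H[0][1]
    c, d = H[1][0], H[1][1] + lam
    det = a * d - b * c
    # Closed-form inverse of a 2x2 matrix applied to -g.
    return [(-d * g[0] + b * g[1]) / det, (c * g[0] - a * g[1]) / det]

def cosine(u, v):
    """Cosine similarity between two 2-D vectors."""
    dot = u[0] * v[0] + u[1] * v[1]
    return dot / (math.hypot(*u) * math.hypot(*v))

# Toy curvature matrix and gradient (illustrative values only).
H = [[100.0, 0.0], [0.0, 1.0]]
g = [1.0, 1.0]
neg_g = [-g[0], -g[1]]

# lam = 0 gives the pure second-order (Newton) direction.
newton = damped_step(H, g, 0.0)

# Large damping: the step aligns with the plain gradient-descent direction.
cos_large = cosine(damped_step(H, g, 1e6), neg_g)
# Small damping: the step aligns with the Newton direction.
cos_small = cosine(damped_step(H, g, 1e-6), newton)
# The two extremes point in clearly different directions for this H.
cos_extremes = cosine(newton, neg_g)

print(cos_large, cos_small, cos_extremes)
```

In this toy setting the damping λ acts as the interpolation knob: a large λ corresponds to a small trust region and a step along the negative gradient, while a small λ corresponds to a large trust region and a step that rescales the gradient by the curvature.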

“Our theory suggests that PC should escape saddle points faster than BP, a prediction which we prove in a shallow linear model and support with experiments on deeper networks,” said Francesco Innocenti.

This work lays a foundation for understanding PC in deep and wide networks.

The ICML Localized Learning Workshop aims to overcome the limitations of global end-to-end learning, delving into the fundamentals of localized learning, which is broadly defined as any training method that updates model parts through non-global objectives.

For more information, see this blog post.